Facebook forced to DENY insane 10 Year Challenge conspiracy

If you've been on your social media accounts recently, you've most likely been unable to avoid the new fad: the 10 Year Challenge.

Celebrities from Mariah Carey, Trevor Noah and Amy Schumer to Caitlyn Jenner have taken part in the glow-up experiment, with most famous faces simply proving how freakishly ageless they are.

Some participants brought humour to the trend by pairing their own photo with one of a look-alike celebrity, or, in Jenner's case, by showing how she had changed gender over the last 10 years.

The concept of then-and-now images isn't exactly new, but it has gained massive traction over the last week. What harm could it do?

Kate O'Neill, writing for Wired magazine, floated an idea that essentially blew our minds, and her semi-sarcastic suggestion even forced Facebook to issue a denial.

Her idea? That the 10 Year Challenge could be useful to any entity looking to develop facial recognition algorithms that model ageing.

Me 10 years ago: probably would have played along with the profile picture aging meme going around on Facebook and Instagram

Me now: ponders how all this data could be mined to train facial recognition algorithms on age progression and age recognition— Kate O'Neill (@kateo) January 12, 2019

O'Neill flipped a metaphorical table by suggesting the tech giant had started the trend solely to harvest facial recognition data from the social network's users.

In her article, "Facebook's 10 Year Challenge Is Just a Harmless Meme - Right?", she writes:

"I knew the facial recognition scenario was broadly plausible and indicative of a trend that people should be aware of. It’s worth considering the depth and breadth of the personal data we share without reservations."

In short, the theory goes that Facebook needed data to experiment with, and the meme proved the perfect way to gather it.

Like most emerging technology, facial recognition's potential is mostly mundane: age recognition is probably most useful for targeted advertising. But also like most tech, there are chances of fraught consequences: it could someday factor into insurance assessment and healthcare.

— Kate O'Neill (@kateo) January 13, 2019

"Imagine that you wanted to train a facial recognition algorithm on age-related characteristics and, more specifically, on age progression (e.g., how people are likely to look as they get older)," she added.

"Ideally, you'd want a broad and rigorous dataset with lots of people's pictures. It would help if you knew they were taken a fixed number of years apart—say, 10 years." WHAT.

O'Neill suggests that a powerful technology company could use such an algorithm for advertising, insurance assessment, healthcare and even finding missing children: consequences that are positive and potentially dangerous at the same time.

Of course, this is all total speculation, unsupported by any evidence. Yet Facebook was still forced to dispel the rumours:

The 10 year challenge is a user-generated meme that started on its own, without our involvement. It’s evidence of the fun people have on Facebook, and that’s it.

— Facebook (@facebook) January 16, 2019

Do we place too much trust in sites like Facebook? Even if the challenge isn't a case of social engineering, the website has come under fire following numerous controversial claims against it.

Social games designed to extract data aren't far from reality; just cast your mind back to the Cambridge Analytica scandal.

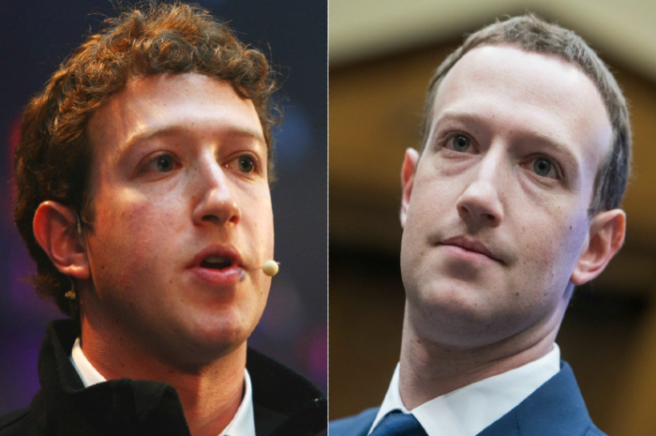

The mass extraction of data from over 70 million American Facebook users rocked the country so much that Mark Zuckerberg himself had to testify before Congress.

Another aspect of the website that draws negative attention is its questionable community guidelines, which seem to be applied more rigidly to certain types of people.

Let's face it, Facebook is already heavily entangled in politics, from the critical 2016 US presidential election to Russian interference.

JUST IN: Facebook says it has removed 364 pages and accounts related to coordinated inauthentic behavior from Russia – blog $FB pic.twitter.com/cBvBnci9sG

— Reuters Business (@ReutersBiz) January 17, 2019

According to Kate O'Neill, the data acquired by major tech corporations could be used for population control and law enforcement:

"After Amazon introduced real-time facial recognition services in late 2016, they began selling those services to law enforcement and government agencies, such as the police departments in Orlando and Washington County, Oregon."

"But the technology raises major privacy concerns; the police could use the technology not only to track people who are suspected of having committed crimes, but also people who are not committing crimes, such as protesters and others whom the police deem a nuisance," she continued.

Facebook's involvement in various privacy controversies has created a tumultuous relationship between the tech giant and its users.

O'Neill is definitely right about one thing: data is one of the most powerful currencies around, so don't spend it recklessly.

As she puts it, “Regardless of the origin or intent behind this meme, we must all become savvier about the data we create and share, the access we grant to it, and the implications for its use.”

Feature image credit: Mamamia